Works & Projects

A screenshot of the user interface of the custom iPhone app built with the ARKit library, showing the detected planes

Students collecting plane detection data using the custom iPhone app built with the ARKit library

The result of the Plane Matching algorithm, which matches the planes detected by the app (yellow) to the segments in the provided floor plan (black). The green and red segments are the ones used by the RANSAC algorithm to generate this match.

Two-Stage Plane-Based Indoor Localization with ARKit and Floor Plans

(Current Project)

Enhancing the accuracy and robustness of localization and camera pose estimation in unexplored indoor environments by leveraging smartphone sensors and publicly available floor plans, with a particular focus on the needs of Blind and Visually Impaired (BVI) individuals.

Mentored 3 talented high school students through the problem formulation and initial data collection stages.

Illustration of the landmark-to-instruction process from "Less is More: Generating Grounded Navigation Instructions from Landmarks"

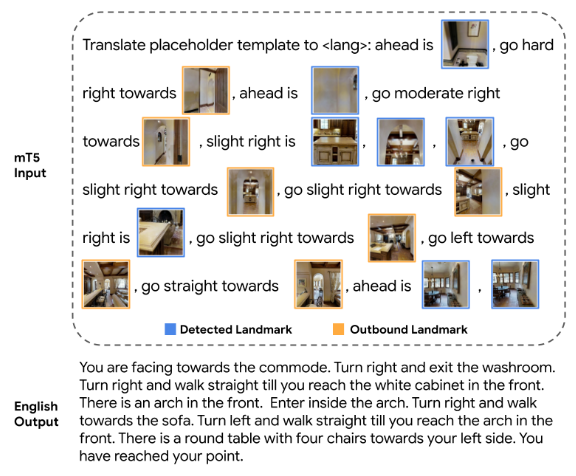

Illustration of Marky-mT5's input and output, from the "Less is More: Generating Grounded Navigation Instructions from Landmarks" paper

Illustration of tactile guide detection from the MP3D dataset

Blind Embodied AI for Navigation Instruction Evaluation

Link to Project Report

Despite the popularity of assistive technology research for indoor navigation, no existing solution generates navigation instructions designed for Blind and Visually Impaired (BVI) people. To adapt recent work in navigation instruction generation for the BVI community, we constructed a silver dataset by adding distance, tactile guide, and landmark information to the instructions in the Room-across-Room (RxR) dataset. In addition, we designed experiments to explore the correlation between blind embodied navigation agents and BVI people in the point-goal navigation setting. This project proposes the novel problem of adapting navigation instruction generation for the BVI community and opens a new discussion on the correlation between blind embodied agents and BVI people.

Contactless Vitals Measurement

Researched the effects of background and lighting augmentation on the MTTS-CAN model with Andrey Risukhin, Xin Liu from the University of Washington, and Daniel McDuff from Microsoft. The original paper: Multi-Task Temporal Shift Attention Networks for On-Device Contactless Vitals Measurement.

Facial Generation / Transformation

Mixed Style Face Transformation with StyleGAN2 and pixel2style2pixel (GitHub)

Object Detection

Transfer Learning on the YOLOv5-s object detection model

2D-Range LiDAR Object Detection

Detect traffic cones via template matching using 2D-Range LiDAR data

Mobile & Web Application

A second-hand trading platform designed for college students to reduce waste and living costs. Made with React/React Native and Firebase. Demo Video